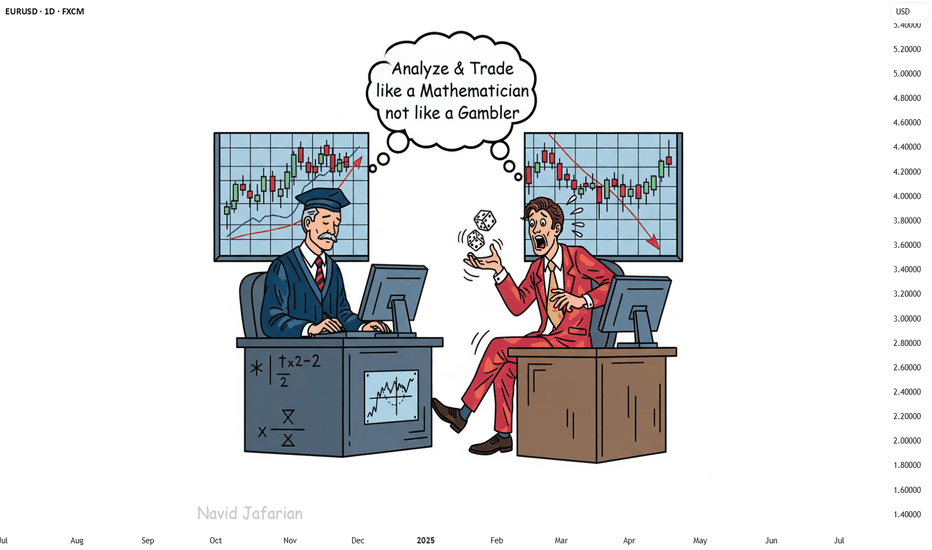

123 Quick Learn Trading Tips - Tip #7 - The Dual Power of Math

The Dual Power of Math: Logic for Analysis, Willpower for Victory

✅ An ideal trader is a mix of a sharp analyst and a tough fighter.

To succeed in the financial markets, you need both logical decision-making and the willpower to stay on track.

Mathematics is the perfect gym to develop both of these key skills at the same time.

From a logical standpoint, math turns your mind into a powerful analysis tool. It teaches you how to break down complex problems into smaller parts, recognize patterns, and build your trading strategies with step-by-step thinking.

This is the exact skill you need to deeply understand probabilities and accurately calculate risk-to-reward ratios. 🧠

But the power of math doesn't end with logic. Wrestling with a difficult problem and not giving up builds a steel-like fighting spirit. This mental strength helps you stay calm during drawdowns and stick to your trading plan.

"Analyze with the precision of a mathematician and trade with the fighting spirit of a mathematician 👨🏻🎓, not with the excitement of a gambler 🎲."

Navid Jafarian

Every tip is a step towards becoming a more disciplined trader.

Look forward to the next one! 🌟

Mathematics

Engineering the Hull‑style Exponential Moving Average (HEMA)

▶️ Introduction

Hull’s Moving Average (HMA) is beloved because it offers near–zero‑lag turns while staying remarkably smooth. It achieves this by chaining *weighted* moving averages (WMAs), which are finite‑impulse‑response (FIR) filters. Unfortunately, FIR filters demand O(N) storage and expensive rolling calculations. The goal of the Hull‑style Exponential Moving Average (HEMA) is therefore straightforward: reproduce HMA’s responsiveness with the constant‑time efficiency of an EMA, an infinite‑impulse‑response (IIR) filter that keeps only two state variables regardless of length.

▶️ From FIR to IIR – What Changes?

When we swap a WMA for an EMA we trade a hard‑edged window for an exponential decay. This swap creates two immediate engineering challenges. First, the EMA’s centre of mass (CoM) does not coincide with that of a WMA of the same “period”: under the usual α = 2 / (N + 1) convention the EMA’s mean lag is (N − 1) / 2 bars against (N − 1) / 3 for the WMA, so we must tune its alpha to match the WMA’s effective lag. Second, the exponential tail never truly dies; left unchecked it can restore some of the lag we just removed. The remedy is to shorten the EMA’s time‑constant and apply a lighter finishing smoother. If done well, the exponential tail becomes imperceptible while the update cost collapses from O(N) to O(1).

▶️ Dissecting the Original HMA

HMA(N) is constructed in three steps:

1. Compute a *slow* WMA of length N.

2. Compute a *fast* WMA of length N/2, double it, then subtract the slow WMA. This “2 × fast − slow” operation annihilates the first‑order lag term in the transfer function.

3. Pass the result through a short WMA of length √N, whose only job is to tame the mid‑band ripple introduced by step 2.

Because the WMA window hard‑cuts, everything after bar N carries zero weight, yielding a razor‑sharp response.
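The three steps can be sketched in plain Python. This is only an illustration, not a reference implementation: the function names `wma` and `hma` are made up here, and flooring N/2 and rounding √N to whole bars are assumptions, since the text does not say how fractional lengths are handled.

```python
import math

def wma(values):
    """Linearly weighted average: the newest value gets the largest weight."""
    n = len(values)
    weights = range(1, n + 1)               # oldest -> 1, newest -> n
    return sum(w * v for w, v in zip(weights, values)) / (n * (n + 1) / 2)

def hma(prices, n):
    """Hull Moving Average series; None until enough bars are available."""
    half = max(n // 2, 1)                   # fast length (floor is an assumption)
    root = max(int(round(math.sqrt(n))), 1) # smoother length (rounding is an assumption)
    raw = []                                # the "2 x fast - slow" series
    out = []
    for i in range(len(prices)):
        if i + 1 < n:                       # not enough bars for the slow WMA yet
            out.append(None)
            continue
        slow = wma(prices[i + 1 - n : i + 1])
        fast = wma(prices[i + 1 - half : i + 1])
        raw.append(2 * fast - slow)
        out.append(wma(raw[-root:]) if len(raw) >= root else None)
    return out
```

Note that every bar re-reads up to N stored prices, which is exactly the O(N) storage and rolling cost the EMA-based rebuild is meant to remove.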

▶️ Re‑building Each Block with EMAs

1. Slow leg.

We choose αₛ = 3 / (2N − 1).

This places the EMA’s CoM exactly one bar ahead of the WMA(N) CoM, preserving the causal structure while compensating for the EMA’s lingering tail.

2. Fast leg.

John Ehlers showed that two single‑pole filters can cancel first‑order phase error if their time constants keep the ratio τ𝑓 = [ln 2 / (1 + ln 2)] · τₛ ≈ 0.409 τₛ.

We therefore compute α𝑓 = 1 − e^(−λₛ / 0.409),

where λₛ = −ln(1 − αₛ).

3. Zero‑lag blend.

Instead of Hull’s integer 2/−1 pair we adopt Ehlers’ fractional weights:

(1 + ln 2) · EMA𝑓 − ln 2 · EMAₛ.

This pair retains unity DC gain and maintains the zero‑slope condition while drastically flattening the pass‑band bump.

4. Finishing smoother.

The WMA(√N) in HMA adds roughly one and a half bars of consequential delay. Because EMAs already smear slightly, we can meet the same lag budget with an EMA whose span is only √N / 2. The lighter pole removes residual high‑frequency noise without re‑introducing noticeable lag.
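The four blocks can be wired together as a streaming filter. The sketch below is one possible reading of the recipe, not the author's implementation. The class name `Hema`, seeding every state with the first price, converting the √N / 2 span to alpha with 2 / (span + 1), flooring that span at one bar, and clamping alphas to 1 are all assumptions added for illustration.

```python
import math

LN2 = math.log(2.0)

class Hema:
    """Streaming Hull-style EMA built from three one-pole filters."""

    def __init__(self, n):
        ratio = LN2 / (1.0 + LN2)                      # ~0.409, fast/slow time-constant ratio
        self.a_slow = min(3.0 / (2.0 * n - 1.0), 1.0)  # slow-leg alpha from the text, clamped
        if self.a_slow < 1.0:
            lam_slow = -math.log(1.0 - self.a_slow)    # decay rate of the slow leg
            self.a_fast = 1.0 - math.exp(-lam_slow / ratio)
        else:
            self.a_fast = 1.0
        span = max(math.sqrt(n) / 2.0, 1.0)            # finishing span, floored at one bar (assumption)
        self.a_smooth = 2.0 / (span + 1.0)             # span -> alpha convention (assumption)
        self.slow = self.fast = self.out = None

    def update(self, price):
        if self.out is None:                           # seed all states with the first price (assumption)
            self.slow = self.fast = self.out = price
            return price
        self.slow += self.a_slow * (price - self.slow)
        self.fast += self.a_fast * (price - self.fast)
        blend = (1.0 + LN2) * self.fast - LN2 * self.slow  # zero-lag blend, weights sum to 1
        self.out += self.a_smooth * (blend - self.out)
        return self.out
```

Each update touches a handful of scalars and no price history, which is where the O(1) cost comes from.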

▶️ Error Budget vs. Classical HMA

Quantitatively, HEMA tracks HMA to within 0.1–0.2 bars on the first visible turn for N between 10 and 50. Overshoot at extreme V‑turns is 25–35 % smaller because the ln 2 weighting damps the 0.2 fs gain peak. Root‑mean‑square ripple inside long swings falls by roughly 15–20 %. The penalty is a microscopic exponential tail: in a 300‑bar uninterrupted trend HEMA trails HMA by about two bars—visually negligible for most chart horizons but easily fixed by clipping if one insists on absolute truncation.

▶️ Practical Evaluation

Side‑by‑side plots confirm the math. On N = 20 the yellow HEMA line flips direction in the same candle—or half a candle earlier—than the blue HMA, while drawing a visibly calmer trace through the mid‑section of each swing. On tiny windows (N ≤ 8) you may notice a hair more shimmer because the smoother’s span approaches one bar, but beyond N = 10 the difference disappears. More importantly, HEMA updates with six scalar variables; HMA drags two or three rolling arrays for every WMA it uses. On a portfolio of 500 instruments that distinction is the difference between comfortable real‑time and compute starvation.

▶️ Conclusion

HEMA is not a casual “replace W with E” hack. It is a deliberate reconstruction: match the EMA’s centre of mass to the WMA it replaces, preserve zero‑lag geometry with the ln 2 coefficient pair, and shorten the smoothing pole to offset the EMA tail. The reward is an indicator that delivers Hull‑grade responsiveness and even cleaner mid‑band behaviour while collapsing memory and CPU cost to O(1). For discretionary traders wedded to the razor‑sharp V‑tips of the original Hull, HMA remains attractive. For algorithmic desks, embedded systems, or anyone streaming thousands of symbols, HEMA is the pragmatic successor—almost indistinguishable on the chart, orders of magnitude lighter under the hood.

Why Your EMA Isn't What You Think It Is

Many new traders adopt the Exponential Moving Average (EMA) believing it's simply a "better Simple Moving Average (SMA)". This common misconception leads to fundamental misunderstandings about how EMA works and when to use it.

EMA and SMA differ at their core. SMA uses a window with a finite number of data points, giving equal weight to each data point in the calculation period. This makes SMA a Finite Impulse Response (FIR) filter in signal processing terms. Remember that FIR means that "all that we need is the 'period' number of data points" to calculate the filter value. Anything beyond the given period is not relevant to FIR filters – much like how a security camera with 14-day storage automatically overwrites older footage, making last month's activity completely invisible regardless of how important it might have been.

EMA, however, is an Infinite Impulse Response (IIR) filter. It uses ALL historical data, with each past price having a diminishing - but never zero - influence on the calculated value. This creates an EMA response that extends infinitely into the past—not just for the last N periods. IIR filters cannot be precise if we give them only a 'period' number of data to work on - they will be off-target significantly due to lack of context, like trying to understand Game of Thrones by watching only the final season and wondering why everyone's so upset about that dragon lady going full pyromaniac.

If we only consider a number of data points equal to the EMA's period, we capture no more than 86.5% of the total weight of the EMA calculation. Relying on the period window alone (the warm-up period) captures only 1 − 1/e² of the weight, which is approximately 1 − 0.1353 = 0.8647, or about 86.5%. That's like claiming you've read a book when you've skipped the first few chapters – technically, you got most of it, but you probably miss some crucial early context.
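The 86.5% figure can be checked numerically. The sketch assumes the α = 2 / (period + 1) convention that the alpha examples in this post imply; the helper name `weight_within_window` is made up for illustration.

```python
def weight_within_window(period):
    """Share of total EMA weight carried by the most recent `period` bars."""
    alpha = 2.0 / (period + 1)
    # EMA weights are alpha * (1 - alpha)**k for k = 0, 1, 2, ...;
    # the first `period` of them sum to 1 - (1 - alpha)**period.
    return 1.0 - (1.0 - alpha) ** period

for period in (5, 15, 50, 200):
    print(period, round(weight_within_window(period), 4))
```

For short periods the share is slightly higher, and it settles toward 1 − 1/e² as the period grows.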

▶️ What is period in EMA used for?

What does a period parameter really mean for EMA? When we select a 15-period EMA, we're not selecting a window of 15 data points as with an SMA. Instead, we are using that number to calculate a decay factor (α) that determines how quickly older data loses influence in the EMA result. Every trader knows the EMA calculation: α = 2 / (1+period) – or at least every trader claims to know this while secretly checking the formula when they need it.

Thinking in terms of "period" seriously restricts EMA. The α parameter can be - should be! - any value between 0.0 and 1.0, offering infinite tuning possibilities of the indicator. When we limit ourselves to whole-number periods that we use in FIR indicators, we can only access a small subset of possible IIR calculations – it's like having access to the entire RGB color spectrum with 16.7 million possible colors but stubbornly sticking to the 8 basic crayons in a child's first art set because the coloring book only mentioned those by name.

For example:

Period 10 → alpha = 0.1818

Period 11 → alpha = 0.1667

What about wanting an alpha of 0.17, which might yield superior returns in your strategy that uses EMA? No whole-number period can provide this! Direct α parameterization offers more precision, much like how an analog tuner lets you find the perfect radio frequency while digital presets force you to choose only from predetermined stations, potentially missing the clearest signal sitting right between channels.
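A small sketch makes the gap between integer periods concrete. It assumes the α = 2 / (period + 1) convention that produces the two values listed above; both helper names are hypothetical.

```python
def alpha_from_period(period):
    """Conventional period -> alpha conversion (assumed: 2 / (period + 1))."""
    return 2.0 / (period + 1.0)

def period_from_alpha(alpha):
    """Inverse conversion; the result is usually not a whole number."""
    return 2.0 / alpha - 1.0

print(round(alpha_from_period(10), 4))    # period 10
print(round(alpha_from_period(11), 4))    # period 11
print(round(period_from_alpha(0.17), 2))  # "period" that alpha = 0.17 would need
```

An alpha of 0.17 maps to a fractional period between 10 and 11, which a whole-number period input cannot express.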

Sidenote: the choice of α = 2 / (1+period) is just a convention from the 1970s, probably started by J. Welles Wilder, who popularized the use of the 14-day EMA. It was designed to create an approximate equivalence between EMA and SMA over the same number of periods, even though SMA needs a period window (as it is an FIR filter) and EMA doesn't. In reality, the decay factor α in EMA should be allowed any value between 0.0 and 1.0, not just some discrete values derived from an integer-based period! Algorithmic systems should find the best α decay for EMA directly, allowing the system to fine-tune at will and not through conversion of an integer period to a float α decay – though this might put a few traditionalist traders into early retirement. Well, to prevent that, most traditionalist implementations of EMA only use period and no alpha at all. Heaven forbid we disturb people who print their charts on paper, draw trendlines with rulers, and insist the market "feels different" since computers do algotrading!

▶️ Calculating EMAs Efficiently

The standard textbook formula for EMA is:

EMA = CurrentPrice × alpha + PreviousEMA × (1 - alpha)

But did you know that a more efficient version exists, once you apply a tiny bit of high school algebra:

EMA = alpha × (CurrentPrice - PreviousEMA) + PreviousEMA

The first one requires three operations: 2 multiplications + 1 addition. The second one also requires three ops: 1 multiplication + 1 addition + 1 subtraction.

That's pathetic, you say? Not worth implementing? In most computational models, multiplications cost much more than additions/subtractions – much like how ordering dessert costs more than asking for a water refill at restaurants.

Relative CPU cost of float operations :

Addition/Subtraction: ~1 cycle

Multiplication: ~5 cycles (depending on precision and architecture)

Now you see the difference? 2 * 5 + 1 = 11 against 5 + 1 + 1 = 7. That is ≈ 36.36% efficiency gain just by swapping formulas around! And making your high school math teacher proud enough to finally put your test on the refrigerator.
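The two forms are algebraically identical, which a quick sketch can confirm. The function names and sample prices are made up for illustration. Whether the rearranged form is actually faster depends on the hardware and the language runtime, so it is worth timing on your own setup rather than trusting cycle counts.

```python
def ema_textbook(prev, price, alpha):
    """Textbook form: two multiplications and one addition (1 - alpha could be precomputed)."""
    return price * alpha + prev * (1.0 - alpha)

def ema_rearranged(prev, price, alpha):
    """Rearranged form: one multiplication, one subtraction, one addition."""
    return alpha * (price - prev) + prev

prices = [101.0, 102.5, 99.8, 100.4, 103.1]   # hypothetical sample data
alpha = 0.17
a = b = prices[0]
for p in prices[1:]:
    a = ema_textbook(a, p, alpha)
    b = ema_rearranged(b, p, alpha)
print(abs(a - b) < 1e-12)
```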

▶️ The Warmup Problem: how to start the EMA sequence right

How do we calculate the first EMA value when there's no previous EMA available? Let's see some possible options used throughout the history:

Start with zero: EMA(0) = 0. This creates stupidly large distortion until enough bars pass for the horrible effect to diminish – like starting a trading account with zero balance but backdating a year of missed trades, then watching your balance struggle to climb out of a phantom debt for months.

Start with first price: EMA(0) = first price. This is better than starting with zero, but still causes initial distortion that will be extra-bad if the first price is an outlier – like forming your entire opinion of a stock based solely on its IPO day price, then wondering why your model is tanking for weeks afterward.

Use SMA for warmup: This is the tradition from the pencil-and-paper era of technical analysis – when calculators were luxury items and "algorithmic trading" meant your broker had neat handwriting. We first calculate an SMA over the initial period, then kickstart the EMA with this average value. It's widely used due to tradition, not merit, creating a mathematical Frankenstein that uses an FIR filter (SMA) during the initial period before abruptly switching to an IIR filter (EMA). This methodology is so aesthetically offensive (abrupt kink on the transition from SMA to EMA) that charting platforms hide these early values entirely, pretending EMA simply doesn't exist until the warmup period passes – the technical analysis equivalent of sweeping dust under the rug.

Use WMA for warmup: This one was never popular because it is harder to calculate with a pencil - compared to using simple SMA for warmup. Weighted Moving Average provides a much better approximation of a starting value as its linear descending profile is much closer to the EMA's decay profile.

These methods all share one problem: they produce inaccurate initial values that traders often hide or discard, much like how hedge funds conveniently report awesome performance "since strategy inception" only after their disastrous first quarter has been surgically removed from the track record.

▶️ A Better Way to start EMA: Decaying compensation

Think of it this way: An ideal EMA uses an infinite history of prices, but we only have data starting from a specific point. This creates a problem - our EMA starts with an incorrect assumption that all previous prices were all zero, all close, or all average – like trying to write someone's biography but only having information about their life since last Tuesday.

But there is a better way. It requires more than high school math comprehension and is more computationally intensive, but is mathematically correct and numerically stable. This approach involves compensating calculated EMA values for the "phantom data" that would have existed before our first price point.

Here's how phantom data compensation works:

We start our normal EMA calculation:

EMA_today = EMA_yesterday + α × (Price_today - EMA_yesterday)

But we add a correction factor that adjusts for the missing history:

Correction = 1 at the start

Correction = Correction × (1-α) after each calculation

We then apply this correction:

True_EMA = Raw_EMA / (1-Correction)

This correction factor starts at 1 (full compensation effect) and gets exponentially smaller with each new price bar. After enough data points, the correction becomes so small (i.e., below 0.0000000001) that we can stop applying it as it is no longer relevant.

Let's see how this works in practice:

For the first price bar (the raw EMA starts from zero):

Raw_EMA = α × (Price_1 - 0) + 0 = α × Price_1

Correction = 1 × (1-α) = (1-α)

True_EMA = α × Price_1 ÷ (1-(1-α)) = Price_1

For the second price bar:

Raw_EMA = α × (Price_2 - Raw_EMA) + Raw_EMA = α × Price_2 + (1-α) × α × Price_1

Correction = (1-α) × (1-α) = (1-α)²

True_EMA = Raw_EMA ÷ (1-(1-α)²) = (Price_2 + (1-α) × Price_1) ÷ (2-α)

For the third price bar:

Raw_EMA updates using the standard formula

Correction = (1-α)² × (1-α) = (1-α)³

True_EMA = Raw_EMA ÷ (1-(1-α)³)

With each new price, the correction factor shrinks exponentially. After about log₁₀(1e-10)/log₁₀(1-α) bars (both logarithms are negative, so the ratio is positive; roughly 115 bars for α = 0.1818), the correction becomes negligible, and our EMA calculation matches what we would get if we had infinite historical data.

This approach provides accurate EMA values from the very first calculation. There's no need to use SMA for warmup or discard early values before output converges - EMA is mathematically correct from first value, ready to party without the awkward warmup phase.
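The compensation idea can be cross-checked in a few lines of Python. This is a sketch under stated assumptions: the raw EMA starts at zero, every price including the first goes through the standard update, and the result is divided by the weight that real data has received so far. The function names are hypothetical, and `weighted_reference` exists only to verify the result by brute force.

```python
def compensated_ema(prices, alpha, eps=1e-10):
    """EMA with decaying compensation; valid from the first bar."""
    raw = 0.0       # raw EMA, seeded with zero ("phantom" history)
    decay = 1.0     # weight still assigned to the phantom history
    out = []
    for price in prices:
        raw += alpha * (price - raw)           # standard (rearranged) EMA update
        if decay > eps:
            decay *= 1.0 - alpha               # phantom weight shrinks each bar
            out.append(raw / (1.0 - decay))    # rescale by the weight of real data
        else:
            out.append(raw)                    # correction is negligible by now
    return out

def weighted_reference(prices, alpha):
    """Exponentially weighted mean of all prices so far, computed directly."""
    out = []
    for i in range(len(prices)):
        weights = [(1.0 - alpha) ** (i - j) for j in range(i + 1)]
        total = sum(w * p for w, p in zip(weights, prices[: i + 1]))
        out.append(total / sum(weights))
    return out
```

On any price list the two functions should agree to within floating-point error, and the first output equals the first price.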

Here is a Pine Script 6 implementation of EMA that can take the alpha parameter directly (or a period if desired), returns valid values from the start, is resilient to dirty input values, uses a decaying compensator instead of SMA, and uses the least amount of computational cycles possible.

```pine
// Enhanced EMA function with proper initialization and efficient calculation
ema(series float source, simple int period=0, simple float alpha=0)=>
    // Input validation - one of alpha or period must be provided
    if alpha<=0 and period<=0
        runtime.error("Alpha or period must be provided")
    // Calculate alpha from period if alpha not directly specified
    // (period 10 -> 2/11 = 0.1818, matching the examples above)
    float a = alpha > 0 ? alpha : 2.0 / (math.max(period, 1) + 1)
    // Initialize variables for EMA calculation
    var float ema = 0.0        // Raw EMA, seeded with zero ("phantom" history)
    var float result = na      // Stores final corrected EMA
    var float e = 1.0          // Decay compensation factor
    var bool warmup = true     // Flag for warmup phase
    // Skip na inputs so dirty data does not poison the state
    if not na(source)
        // Standard EMA calculation (optimized formula), applied from the
        // first bar on so that the first price keeps its proper weight
        ema := a * (source - ema) + ema
        if warmup
            // During warmup phase, apply decay compensation
            e *= (1-a)                  // Update decay factor
            float c = 1.0 / (1.0 - e)   // Calculate correction multiplier
            result := c * ema           // Apply correction
            // Stop warmup phase when correction becomes negligible
            if e <= 1e-10
                warmup := false
        else
            // After warmup, EMA operates without correction
            result := ema
    result // Return the properly compensated EMA value
```

▶️ CONCLUSION

EMA isn't just a "better SMA"—it is a fundamentally different tool, like how a submarine differs from a sailboat – both float, but the similarities end there. EMA responds to inputs differently, weighs historical data differently, and requires different initialization techniques.

By understanding these differences, traders can make more informed decisions about when and how to use EMA in trading strategies. And as EMA is embedded in so many other complex and compound indicators and strategies, if a system uses a tainted and inferior EMA calculation, it is doing a disservice to all derivative indicators too – like building a skyscraper on a foundation of Jell-O.

The next time you add an EMA to your chart, remember: you're not just looking at a "faster moving average." You're using an INFINITE IMPULSE RESPONSE filter that carries the echo of all previous price actions, properly weighted to help make better trading decisions.

EMA done right might significantly improve the quality of all signals, strategies, and trades that rely on EMA somewhere deep in its algorithmic bowels – proving once again that math skills are indeed useful after high school, no matter what your guidance counselor told you.

NVIDIA Death Cross Quant Perspectives (Light Case Study)

NASDAQ: Nvidia (NVDA) has recently experienced an uptrend after a death cross formed consisting of the 65 and 200 EMAs on the 1 Day chart.

If we analyze back on Nvidia starting in 1999, we can count a total of 10 death crosses that have occurred, and 9 have been immediately followed by downtrends. Although a single death cross did not have an immediate downtrend, shortly after this event (approx. 282 days) another death cross formed and price then fell roughly twice as far as it historically has, almost appearing to make up for the missed signal.

From a quantitative perspective:

If we calculate the raw historical success rate using:

Raw Success Rate = 9 / 10 = 0.90

With this calculation the observed success of 65/200 EMA death crosses correlating to an immediate downtrend is 90%.

In order to avoid overconfidence we can apply Laplace smoothing using:

Smoothed Probability = (9 + 1) / (10 + 2) = 10/12 ≈ 0.8333

With this calculation the smoothed estimate that a 65/200 EMA death cross is immediately followed by a downtrend is about 83%.
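Both estimates can be reproduced with a few lines of Python. The helper name `laplace_smoothed` and its `pseudo` parameter are made up for illustration; one pseudo-success plus one pseudo-failure is the usual "add-one" form of the rule.

```python
def laplace_smoothed(successes, trials, pseudo=1):
    """Add-one (Laplace) estimate: pretend we also saw `pseudo` wins and `pseudo` losses."""
    return (successes + pseudo) / (trials + 2 * pseudo)

raw_rate = 9 / 10
smoothed = laplace_smoothed(9, 10)
print(round(raw_rate, 4), round(smoothed, 4))
```

With only 10 observations the smoothing pulls the estimate noticeably toward 50%, and the adjustment shrinks as the sample grows.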

Given the results of the data I personally feel that there is a Very High (83%) chance this death cross that recently formed on the 1 Day chart (around 04/16/2025) will immediately lead to a downtrend. And a Low (17%) chance it does not. Furthermore these results support a technical analysis hypothesis that I formed prior.

Many different systemic factors can contribute to the market movement, but mathematics sometimes leave subtle clues. Will the market become bearish? Or will Nvidia gain renewed bullish interest?

Disclaimer: Not Financial Advice.

ETH Will Flip Bitcoin – Sooner Than You Think

Everyone’s watching ETFs and halvings — but here’s what they’re not seeing:

Ethereum isn’t just a currency.

It’s digital infrastructure — powering AI, tokenized real estate, RWAs, and decentralized identity.

The next wave of global finance will run on Ethereum.

📈 My prediction:

By Q1 2026, ETH will flip BTC in market cap.

Not hype — mathematics + macro + mass adoption.

🧠 Smart money is already rotating.

Are you paying attention?

⏳ Don't say "nobody warned you."

Witness Magic of Statistical Models in Harmony with Fibonacci

Witness the Magic of Statistical Models in Harmony with Fibonacci Levels! ✨📊

Have you ever seen mathematical beauty unfold right before your eyes? 🤯 Imagine the elegance of statistical models perfectly aligning with Fibonacci levels—it's like witnessing a symphony of numbers! 🎶🔢

Fibonacci sequences, deeply rooted in nature and financial markets alike, provide a powerful tool for identifying key price levels. 📈✨ When combined with robust statistical models, they unveil patterns that might otherwise go unnoticed. The result? A mesmerizing blend of logic and intuition, precision and prediction! 🎯🔮

📌 Why is this combination so fascinating?

✅ Predictive Power – Fibonacci levels help anticipate market trends, while statistical models refine those predictions. 📊🔍

✅ Data-Driven Accuracy – Instead of relying on guesswork, this approach leverages solid mathematical foundations. 🏗️📈

✅ Aesthetic Elegance – There's something truly captivating about seeing numbers align so seamlessly! ✨🔢

So, what do you think of this remarkable harmony? 🤩 Let’s discuss in the comments! ⬇️

Ethereum (ETHUSD): Time to Position for Profits!

Ethereum (ETH/USD) is currently positioned for a bullish breakout, and I am excited to share my analysis with fellow traders.

Bullish Sentiment and Technical Setup

The current price action shows ETH testing the $4,000 resistance level, which, if broken, could trigger a wave of FOMO buying, propelling prices towards $4,500 and beyond. With the Relative Strength Index (RSI) reflecting strong buying momentum, now is an opportune time to consider long positions.

I will be utilizing probabilities to strategically enter into long trades, focusing on support levels around $3,600 to $3,700 as potential entry points during any pullbacks.

Several fundamental factors are contributing to this bullish outlook:

Institutional Interest: Recent reports indicate that BlackRock has made a significant investment in Ethereum, purchasing over $230 million worth. This institutional backing is a strong signal of confidence in Ethereum’s future.

Market Dynamics: The overall cryptocurrency market is experiencing renewed interest as Bitcoin has surpassed the psychological barrier of $100,000. Historically, Ethereum tends to follow Bitcoin's lead, and this correlation suggests that ETH could also see substantial upward movement.

Technological Advancements: Ethereum's ongoing developments in DeFi and NFT sectors continue to attract users and investors alike. The upcoming upgrades and increased adoption are likely to bolster its price further.

In summary, the combination of technical indicators pointing towards a bullish trend and supportive fundamentals creates a compelling case for trading ETH/USD long.

As we navigate this exciting market landscape, I encourage fellow traders to stay vigilant and consider positioning themselves for potential gains in the coming weeks.

P.S. If you have any questions about how I trade probabilities with the overall market direction, feel free to reach out.

Let's capitalize on this momentum together!

2W:

Hourly Timeframe Entry:

Bullish Outlook on XRPUSD

Key Reasons for a Bullish Bias:

1. Positive Market Sentiment: XRP has recently broken through an important resistance level, which shows that traders are feeling optimistic about its future.

2. Bullish Technical Patterns: An inverted Head and Shoulders pattern has formed, suggesting that XRP might be ready for a price increase.

3. Improving Regulations: Recent developments in cryptocurrency regulations are becoming more favorable, which could attract more institutional investors to XRP.

I plan to use probabilities based on historical data and the X1X2 methodology to enter long positions in XRP. Here’s why:

- Learning from the Past: By looking at past price movements and historical data of XRP, I can spot biases that might help predict future behavior.

- X1X2 Methodology: This method helps me identify key price levels to enter and exit trades, making my strategy more focused.

- Smart Risk Management: By using probabilities, I can set stop-loss orders at strategic points, reducing my risk and making more informed decisions.

In summary, with a positive market outlook and a solid trading strategy based on historical data and mathematical rules, I’m confident in taking long positions in XRPUSD.

Traders, if you found this idea helpful or have your own thoughts on it, please share in the comments. I’d love to hear from you!

12M:

2W:

1H:

Probabilities Powering BTCUSD Trades

Utilizing probabilities based on historical data is a cornerstone of my bullish strategy for BTCUSD. Here’s why I believe this approach is not only effective but essential for positioning long trades successfully.

Understanding the Importance of Probabilities

Probabilities in Trading

Trading is inherently uncertain, and relying on probabilities allows traders to make informed decisions rather than guesses. By analyzing historical price movements and patterns, we can identify trends that have previously led to upward or downward movements. This statistical approach helps mitigate risks associated with emotional decision-making.

Historical Data as a Guide

Historical data provides a wealth of information about how BTCUSD has reacted under various market conditions. By employing a mechanical trading strategy that incorporates these indicators, I can increase my chances of entering profitable trades.

Mechanical Trading Strategy

What is a Mechanical Trading Strategy?

A mechanical trading strategy is a systematic approach that uses predefined rules based on historical data to make trading decisions. This method eliminates emotional bias and ensures consistency in trade execution.

Benefits of a Mechanical Approach

1. Consistency: Adhering to a mechanical strategy means that trades are executed based on data rather than emotions.

2. Backtesting: Historical data allows for backtesting strategies to see how they would have performed in the past, providing confidence in their potential effectiveness.

3. Risk Management: By employing probabilities, I can better manage risk through calculated position sizing and stop-loss orders.

Current Market Context

In the current market environment, BTCUSD shows signs of bullish momentum. The formation of higher lows indicates strength, and historical patterns suggest that we may be at the beginning of another significant upward trend. By leveraging probabilities derived from past performance, I am positioning myself to capitalize on this potential movement.

Conclusion

In summary, utilizing probabilities based on historical data through a mechanical trading strategy equips me with a robust framework for entering long positions in BTCUSD. This approach not only enhances my decision-making process but also aligns with my overall bullish bias. As we navigate the complexities of the crypto market, relying on data-driven strategies will be crucial for achieving success in our trades.

1D:

6H:

LTCUSD: Strong Bullish Momentum with 68.87% Probability for TP1!

I’m optimistic about Litecoin (LTCUSD) right now, and here are some compelling reasons to consider this trade:

- Market Recovery: The overall cryptocurrency market is bouncing back, with many coins, including Litecoin, showing positive price movements after recent dips.

- Growing Adoption: More people and businesses are starting to use cryptocurrencies for transactions, which could increase demand for Litecoin.

- Tech Improvements: Litecoin is undergoing updates that make it more efficient and user-friendly, attracting more interest.

- Positive Sentiment: Many analysts are optimistic about the future of cryptocurrencies, suggesting that prices could continue to rise.

To get positioned for long trades on LTCUSD, I rely on probabilities based on historical data in a mechanical trading system.

In short, my bullish outlook on LTCUSD is supported by strong market fundamentals, and by using probabilities from historical data, I aim to position myself effectively for potential long trades.

Please share your ideas and charts in the comments section below!

12M:

2W:

6H:

Why I’m Betting Bearish on GBPNZD: Key Market Drivers Explained

As I prepare to share my trade idea for GBPNZD, my overall bias is bearish. Here are some key fundamentals currently influencing this outlook:

1. UK Economic Slowdown: The UK is facing economic challenges, with high inflation and downgraded growth forecasts. This situation tends to weaken the British Pound against other currencies, including the New Zealand Dollar.

2. RBNZ's Hawkish Stance: The Reserve Bank of New Zealand (RBNZ) is likely to maintain a strong monetary policy, focusing on controlling inflation. This contrasts sharply with the UK's more cautious approach, which supports a stronger NZD.

3. Seasonal Trends: Historically, GBPNZD has shown a bearish trend from mid-August through December. This seasonal behavior suggests that now is an opportune time to consider short positions.

In my trading strategy for GBPNZD, I rely on probabilities to guide my decisions for entering short positions.

In summary, by leveraging probabilities based on historical data and current market fundamentals, I aim to position myself advantageously for short trades on GBPNZD.

This disciplined approach aligns with my bearish outlook and enhances my trading effectiveness.

I look forward to sharing my journey in this trade and welcome any thoughts or feedback!

2W:

Hourly TF:

The Formula That Helped Me Get Into the Top 2% of Traders

I spent years testing different strategies, obsessing over charts, and trying to find the perfect entry point. It took me a while to realize that it wasn’t just about picking the right trades—it was about knowing how much to risk on each trade. This is where the Kelly Criterion came into play and changed my entire approach.

You’ve probably heard the saying, “Don’t put all your eggs in one basket.” Well, Kelly Criterion takes that idea and puts some hard math behind it to tell you exactly how much you should risk to maximize your long-term growth. It’s not a guessing game anymore—it’s math, and math doesn’t lie.

What is Kelly Criterion?

The Kelly Criterion is a formula that helps you figure out the optimal size of your trades based on your past win rate and the average size of your wins compared to your losses. It’s designed to find the perfect balance between being aggressive enough to grow your account but cautious enough to protect it from major drawdowns.

F = W - (1 - W) / R

F is the fraction of your account you should risk.

W is your win rate (how often you win).

R is your reward-to-risk ratio (the average win relative to the average loss).

Let’s break it down.

How It Works

Let’s say you have a strategy that wins 60% of the time (W = 0.6), and your average win is 2x the size of your average loss (R = 2). Plugging those numbers into the formula, you’d get:

F = 0.6 - (1 - 0.6) / 2

F = 0.6 - 0.4 / 2

F = 0.6 - 0.2 = 0.4

So, according to Kelly, you should risk 40% of your account on each trade. Now, 40% might seem like a lot, but this is just the theoretical maximum for optimal growth.

The thing about using the full Kelly Criterion is that it’s aggressive. A 40% recommended risk allocation, for example, can be intense and lead to significant drawdowns, which is why many traders use half-Kelly, quarter-Kelly or other adjustments to manage risk. It’s a way to tone down the aggressiveness while still using the principle behind the formula.
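The worked example and the half-Kelly and quarter-Kelly adjustments can be sketched in a few lines of Python. This is a minimal illustration, not a trading tool: the function names and the `scale` parameter are hypothetical, and a negative Kelly result (no edge) is treated here as "risk nothing", which is an assumption of this sketch.

```python
def kelly_fraction(win_rate: float, reward_risk: float) -> float:
    """Full Kelly fraction F = W - (1 - W) / R."""
    if not 0.0 <= win_rate <= 1.0:
        raise ValueError("win_rate must be between 0 and 1")
    if reward_risk <= 0:
        raise ValueError("reward_risk must be positive")
    return win_rate - (1.0 - win_rate) / reward_risk


def fractional_kelly(win_rate: float, reward_risk: float, scale: float = 0.5) -> float:
    """Scaled-down Kelly (scale=0.5 is half-Kelly, 0.25 is quarter-Kelly)."""
    # Assumption of this sketch: a negative Kelly value means no edge, so risk 0.
    return max(0.0, kelly_fraction(win_rate, reward_risk)) * scale


full = kelly_fraction(0.6, 2.0)             # the W = 0.6, R = 2 example
half = fractional_kelly(0.6, 2.0, 0.5)
quarter = fractional_kelly(0.6, 2.0, 0.25)
print(f"full: {full:.1%}  half: {half:.1%}  quarter: {quarter:.1%}")
```

With the numbers from the example, the full fraction comes out at 40%, and the half and quarter versions simply scale that figure down.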

Personally, I don’t just take Kelly at face value—I factor in both the sample size (which affects the confidence level) and my max allowed drawdown when deciding how much risk to take per trade. If the law of large numbers tells us we need a good sample size to align results with expectations, then I want to make sure my risk management accounts for that.

Let’s say, for instance, my confidence level is 95% (which is 0.95 in probability terms), and I don’t want to allow my account to draw down more than 10%. We can modify the Kelly Criterion like this:

f = ((W - L) / B) × confidence level × max allowed drawdown

Where:

W is your win probability,

L is your loss probability, and

B is your risk-reward ratio.

Let’s run this with actual numbers:

Suppose your win probability is 60% (0.6), loss probability is 40% (0.4), and your risk-reward ratio is still 2:1. Using the same approach where the confidence level is 95% and the max allowed drawdown is 10%, the calculation would look like this:

f = ((0.6 - 0.4) / 2) × 0.95 × 0.10

f = 0.1 × 0.95 × 0.10 = 0.0095

This gives us a risk percentage of 0.95% for each trade. So, according to this adjusted Kelly Criterion, based on a 60% win rate and your parameters, you should be risking just under 1% per trade.

This shows how adding the confidence level and max drawdown into the mix helps control your risk in a more conservative and tailored way, making the formula much more usable for practical trading instead of over-leveraging.
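The adjusted calculation can be checked with a short Python sketch. The function and parameter names are hypothetical. Note that the (W - L) / B term is the adjusted form used in this post, which is not the same expression as the classic W - (1 - W) / R.

```python
def adjusted_risk(win_prob: float, loss_prob: float, reward_risk: float,
                  confidence: float, max_drawdown: float) -> float:
    """Adjusted risk per trade: ((W - L) / B) * confidence * max drawdown."""
    if reward_risk <= 0:
        raise ValueError("reward_risk must be positive")
    edge = (win_prob - loss_prob) / reward_risk
    return edge * confidence * max_drawdown


# Numbers from the example: 60% wins, 40% losses, 2:1, 95% confidence, 10% drawdown
risk = adjusted_risk(0.6, 0.4, 2.0, 0.95, 0.10)
print(f"risk per trade: {risk:.2%}")
```

Changing `confidence` or `max_drawdown` scales the result linearly, so halving the allowed drawdown halves the risk per trade.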

Why It’s Powerful

The Kelly Criterion gives you a clear, mathematically backed way to avoid overbetting on any single trade, which is a common mistake traders make, especially when they’re chasing losses or getting overconfident after a win streak.

When I started applying this formula, I realized I had been risking too much on bad setups and too little on the good ones. I wasn’t optimizing my growth. Once I dialed in my risk based on the Kelly Criterion, I started seeing consistent growth that got me into the top 2% of traders in the TradingView Leap competition.

Kelly in Action

The first time I truly saw Kelly in action was during a winning streak. Before I understood this formula, I’d probably have gotten greedy and over-leveraged, risking blowing up my account. But with Kelly, I knew exactly how much to risk each time, so I could confidently scale up while still protecting my downside.

Likewise, during losing streaks, Kelly kept me grounded. Instead of trying to "make it back" quickly by betting more, the formula told me to stay consistent and let the odds play out over time. This discipline was key in staying profitable and avoiding big emotional trades.

Practical Use for Traders

You don’t have to be a math genius to use the Kelly Criterion. It’s about taking control of your risk in a structured way, rather than letting emotions guide your decisions. Whether you’re new to trading or have been in the game for years, this formula can be a game-changer if applied correctly.

Final Thoughts

At the end of the day, trading isn’t just about making the right calls—it’s about managing your risks wisely. The Kelly Criterion gives you a clear path to do just that. By understanding how much to risk based on your win rate and risk/reward ratio, you’re not just gambling—you’re playing a game with a serious edge.

So, whether you’re in a winning streak or facing some tough losses, keep your cool. Let the Kelly formula take care of your risk calculation.

If you haven’t started using the Kelly Criterion yet, now’s the time to dive in. Calculate your win rate, figure out your risk/reward ratio, and start applying it.

You’ll protect your account while setting yourself up for long-term profitability.

Trust me, this is the kind of math that can change the game for you.
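The two inputs mentioned above, win rate and risk/reward ratio, can be estimated from a trade log. Here is a minimal Python sketch, assuming each trade result is recorded as a signed profit or loss in risk units. The function names and the sample data are hypothetical, and break-even trades are simply ignored.

```python
def trade_stats(results):
    """Return (win_rate, reward_risk) from a list of signed trade results."""
    wins = [r for r in results if r > 0]
    losses = [-r for r in results if r < 0]  # store losses as positive sizes
    if not wins or not losses:
        raise ValueError("need at least one winning and one losing trade")
    win_rate = len(wins) / (len(wins) + len(losses))
    avg_win = sum(wins) / len(wins)
    avg_loss = sum(losses) / len(losses)
    return win_rate, avg_win / avg_loss


def kelly_fraction(win_rate, reward_risk):
    """Full Kelly fraction F = W - (1 - W) / R."""
    return win_rate - (1.0 - win_rate) / reward_risk


# Hypothetical sample: three wins of 2 units and two losses of 1 unit
sample = [2.0, -1.0, 2.0, -1.0, 2.0]
w, r = trade_stats(sample)
print(f"W = {w:.2f}, R = {r:.2f}, Kelly = {kelly_fraction(w, r):.1%}")
```

A handful of trades like this is far too small a sample to trust; the point made earlier about sample size applies to these estimates as well.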

Bonus: Custom Kelly Criterion Function in Pine Script

If you’re ready to take your trading to the next level, here’s a little bonus for you!

I’ve put together a custom Pine Script function that calculates the optimal risk percentage based on the Kelly Criterion.

You can easily enter the variables to fit your trading strategy.

// @description Calculates the optimal risk percentage using the Kelly Criterion.
// @function kellyCriterion: Computes the risk per trade based on win rate, loss rate, average win/loss, confidence level, and maximum drawdown.
// @param winRate (float) The probability of winning trades (0-1).
// @param lossRate (float) The probability of losing trades (0-1).
// @param avgWin (float) The average win size in risk units.
// @param avgLoss (float) The average loss size in risk units (must be greater than 0).
// @param confidenceLevel (float) Desired confidence level (0-1).
// @param maxDrawdown (float) Maximum allowed drawdown (0-1).
// @returns (float) The calculated risk for each trade as a fraction of the account (0.0095 means 0.95%).
kellyCriterion(winRate, lossRate, avgWin, avgLoss, confidenceLevel, maxDrawdown) =>
    // Adjusted Kelly fraction: (W - L) divided by the risk-reward ratio (avgWin / avgLoss)
    kellyFraction = (winRate - lossRate) / (avgWin / avgLoss)
    // Adjust the risk based on confidence level and maximum drawdown
    adjustedRisk = kellyFraction * confidenceLevel * maxDrawdown
    // Return the adjusted risk (the last expression is the function's return value)
    adjustedRisk

Use this function to implement the Kelly Criterion directly into your trading setup. Adjust the inputs to see how your risk percentage changes based on your trading performance!

How to dollar cost average with precision

I've seen several dollar cost averaging calculators online, but there is something I usually find missing: how many shares should you buy if you want your average cost to reach a specific value? Usually the calculators ask how much you bought at each level and give you the average, but not the other way around (telling you how much to buy to bring your average to a specific value). So I decided to work out the calculation on my own.

Here, you can see the mathematical demonstration: www.mathcha.io
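The reverse calculation described above follows from the weighted-average formula. If you hold q shares at average cost a and buy n more at price p, the new average is (q·a + n·p) / (q + n); setting this equal to the target t and solving gives n = q·(a - t) / (t - p). The Python sketch below implements that formula. The function name and the sample numbers are hypothetical, and this is not necessarily the same derivation as the linked demonstration.

```python
def shares_to_reach_average(held_shares: float, current_avg: float,
                            buy_price: float, target_avg: float) -> float:
    """Shares to buy at buy_price so the average cost becomes target_avg."""
    if target_avg == buy_price:
        raise ValueError("the average can approach the buy price but never reach it")
    shares = held_shares * (current_avg - target_avg) / (target_avg - buy_price)
    if shares < 0:
        raise ValueError("target must lie between the buy price and the current average")
    return shares


# Hypothetical position: 100 shares at an average of $50, price now $30, target $40
n = shares_to_reach_average(100, 50.0, 30.0, 40.0)
new_avg = (100 * 50.0 + n * 30.0) / (100 + n)
print(f"buy {n:.0f} shares -> new average ${new_avg:.2f}")
```

The closer the target is to the current market price, the more shares are needed, and a target equal to the market price is unreachable with any finite purchase.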

$ETH and $BTC Price Level in USD to achieve $ETHBTC ATH

I'm going to keep this simple and straightforward.

BINANCE:ETHBTC , essentially representing the price ratio of Ethereum to Bitcoin, serves as a key indicator of market dynamics between these two leading cryptocurrencies.

After the recent break of structure on this chart, I was curious what USD prices we would need to see for the ATH to break.

The last ATH was on June 12th, 2017. Prices at that ATH were as follows:

ETH: $414.8

BTC: $2980

According to my beloved friend ChatGPT, there are many scenarios in which the ATH at 0.15636 would be broken. Depending on the multiplier, you get a different answer; here are a few that look very possible to me at this stage of the market.

Multiplier: 1.5

New Price of ETH: $3,766

New Price of BTC: $24,085

Multiplier: 1.7

New Price of ETH: $4,269

New Price of BTC: $27,302

Multiplier: 1.9

New Price of ETH: $4,771

New Price of BTC: $30,513

Multiplier: 2.0

New Price of ETH: $5,022

New Price of BTC: $32,118

Multiplier: 2.2

New Price of ETH: $5,524

New Price of BTC: $35,329

Multiplier: 2.4

New Price of ETH: $6,026

New Price of BTC: $38,539
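Each scenario above is a pair of prices whose ratio equals the 0.15636 ATH, so the BTC price follows directly from the ETH price. A small Python sketch of that relationship is below; the function name is hypothetical, and the ETH prices are the ones listed in the scenarios.

```python
ATH_RATIO = 0.15636  # ETHBTC all-time high quoted in the post


def btc_price_at_ratio(eth_price: float, ratio: float = ATH_RATIO) -> float:
    """BTC price at which ETH/BTC equals the given ratio."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return eth_price / ratio


# ETH prices from the scenarios above
for eth in (3766, 4269, 4771, 5022, 5524, 6026):
    print(f"ETH ${eth:,} -> BTC ${btc_price_at_ratio(eth):,.0f}")
```

For the ATH to actually break, BTC would have to sit below the printed level at the given ETH price (or ETH above its level at the given BTC price).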

This might be the biggest signal, showing Ethereum has a lot of potential in the upcoming Altcoin Season / Bullmarket.

Not trying to convince anyone, just speculating on some interesting numbers.

Feel free to come up with more scenarios. 100k for BTC & 15k for ETH might also be possible :D

Triangle formation forms harmonic pattern with chart

Using the peak of our latest high, we can form a triangle that has not been broken out of. The volume profile aligns with the centre of the triangle and provides another line of resistance if BTC breaks up. Similarly, the volume shows us how BTC might face multiple areas of resistance if it breaks down. You’re welcome!

While Everyone is Selling EUR/JPY, It Looks Like It Will Retrace

If you trust math and geometry, then there should be a small retracement for EUR/JPY. The harmonics indicator says there will be a small push up, taking out many stop losses of those who expect it to continue falling. Big banks will profit, and then the price will continue in the actual direction: down.

What do you think?

Scott Carney's "Deep Crab" & the Fields Medal in Mathematics

Q: What does the former have to do with the latter?

A: The intuition in the former (S. Carney) is borne out by the latter (A. Avila; Fields Medal - 2014)

From Scott Carney's website;

---------------------------------------------------------------------------------------------------------

"Harmonic Trading: Volume One Page 136

The Deep Crab Pattern™, is a Harmonic pattern™ discovered by Scott Carney in 2001.

The critical aspect of this pattern is the tight Potential Reversal Zone created by the 1.618 of the XA leg and an extreme (2.24, 2.618, 3.14, 3.618) projection of the BC leg but employs an 0.886 retracement at the B point unlike the regular version that utilizes a 0.382-0.618 at the mid-point. The pattern requires a very small stop loss and usually volatile price action in the Potential Reversal Zone."

---------------------------------------------------------------------------------------------------------

From Artur Avila's Fields Medal Citation;

---------------------------------------------------------------------------------------------------------

"Artur Avila is awarded a Fields Medal for his profound contributions to dynamical systems theory, which have changed the face of the field, using the powerful idea of renormalization as a unifying principle.

Description in a few paragraphs:

Avila leads and shapes the field of dynamical systems. With his collaborators, he has made essential progress in many areas, including real and complex one-dimensional dynamics, spectral theory of the one-frequency Schrödinger operator, flat billiards and partially hyperbolic dynamics.

Avila’s work on real one-dimensional dynamics brought completion to the subject, with full understanding of the probabilistic point of view, accompanied by a complete renormalization theory. His work in complex dynamics led to a thorough understanding of the fractal geometry of Feigenbaum Julia sets.

In the spectral theory of one-frequency difference Schrödinger operators, Avila came up with a global description of the phase transitions between discrete and absolutely continuous spectra, establishing surprising stratified analyticity of the Lyapunov exponent."

---------------------------------------------------------------------------------------------------------

The connection between intuition and the general analytical result, as it relates to the specific "Deep Crab" harmonic pattern in trading, is illustrated here in somewhat simplified form (but without distortion).

In essence, Avila has shown that in dynamical systems, in the neighborhood of phase-transitions in the case of one-dimensional (such as: Price) unimodal distributions, after the onset of chaos, there are islands of stability surrounded nearly entirely by parameters that give rise to stochastic behavior where transitions are Cantor Maps - i.e., fractal.

From that point it is an obvious next step to generalize to other self-affine fractal curves, such as the blancmange curve, which is the special case w = 1/2 of the general form: the Takagi–Landsberg curve. The "Hurst exponent" (H) = -log2(w), which is the measure of the long-term memory of a time series.
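For readers who want to see the curve and the H = -log2(w) relation numerically, here is a minimal Python sketch. It uses the standard series definition of the Takagi–Landsberg curve, T_w(x) = Σ wⁿ·s(2ⁿx), where s(y) is the distance from y to the nearest integer. The function names and the truncation at 40 terms are choices made for this illustration.

```python
import math


def takagi_landsberg(x: float, w: float = 0.5, terms: int = 40) -> float:
    """Truncated series sum of w**n * s(2**n * x), s = distance to nearest integer."""
    total = 0.0
    for n in range(terms):
        y = (2 ** n) * x
        total += (w ** n) * abs(y - round(y))
    return total


def hurst_exponent(w: float) -> float:
    """Hurst exponent as given in the text: H = -log2(w)."""
    return -math.log2(w)


print(takagi_landsberg(1 / 3))          # blancmange curve (w = 1/2) at x = 1/3
print(hurst_exponent(0.5))              # H for the blancmange case
print(hurst_exponent(2 ** -0.5))        # a rougher curve with smaller H
```

For the blancmange case the value at x = 1/3 converges to 2/3, since every term of the series contributes 1/3 times a power of 1/2.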

Putting it all together, it is not pure coincidence that a reliable pattern (representation) emerges from intuition (observation) which proves to be a highly stable (reliable) pattern that is most often the hallmark of a near-term, violent transition.

BTC to 40k by 2024? Algo Alert's take from a qualitative POV

Introduction:

The world of cryptocurrencies has always been accompanied by speculation and predictions about their future prices. One popular model that has gained attention is the LGS2F (Limited Growth Stock-to-Flow) model, which presents a modified version of the original stock-to-flow model for predicting Bitcoin prices. In this blog, we will delve into the LGS2F model and its implications for Bitcoin price predictions.

Understanding the LGS2F Model:

The LGS2F model acknowledges the limitations of the original stock-to-flow model, which projected an infinite growth trajectory for Bitcoin prices. This new model takes a more conservative approach by incorporating the concept of limited growth. By doing so, it aims to provide more realistic predictions that align with the inherent characteristics of Bitcoin.

Limited Growth Concept:

The concept of limited growth implies that the price of Bitcoin will not skyrocket indefinitely but will experience more moderate growth over time. This idea reflects the understanding that as Bitcoin matures and gains wider adoption, its growth potential becomes constrained by various factors such as market saturation, regulatory influences, and competition from other cryptocurrencies.

Predictions for Bitcoin Price:

According to the LGS2F model, Bitcoin is projected to reach a price of around 40,000 USD by the end of 2024. This prediction suggests a more measured growth pattern compared to previous models, which envisioned exponential price increases. The modified model takes into account the increasing scarcity of Bitcoin as well as its growing acceptance in various industries and financial markets.

Factors Influencing the Predictions:

The LGS2F model considers several key factors that impact Bitcoin price predictions:

Stock-to-Flow Ratio: The stock-to-flow ratio is a measure of scarcity that compares the existing supply of Bitcoin (stock) to the newly generated supply (flow) each year. It plays a crucial role in the model's calculations and reflects Bitcoin's limited supply.

Market Dynamics: The model takes into account market dynamics, including investor sentiment, market cycles, macroeconomic conditions, and regulatory developments. These factors can influence the demand for Bitcoin and consequently affect its price.

Adoption and Integration: As Bitcoin gains wider adoption and integration into mainstream financial systems, its perceived value and utility increase. The LGS2F model considers the impact of adoption and integration on price predictions.
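The stock-to-flow ratio described in the first factor is a simple division, sketched below in Python. This shows only the ratio itself, not the LGS2F price model, whose formula is not given here. The supply figure is a rough illustrative assumption, and the annual flow is approximated from one block roughly every 10 minutes at a 3.125 BTC block reward.

```python
def stock_to_flow(stock: float, annual_flow: float) -> float:
    """Stock-to-flow ratio: existing supply divided by new supply per year."""
    if annual_flow <= 0:
        raise ValueError("annual_flow must be positive")
    return stock / annual_flow


# Illustrative assumptions, not exact on-chain data
BLOCKS_PER_YEAR = 6 * 24 * 365      # about one block every 10 minutes
BLOCK_REWARD = 3.125                # BTC per block after the 2024 halving
annual_flow = BLOCKS_PER_YEAR * BLOCK_REWARD
stock = 19_700_000                  # rough circulating supply, assumed for illustration

s2f = stock_to_flow(stock, annual_flow)
print(f"annual flow = {annual_flow:,.0f} BTC, stock-to-flow = {s2f:.1f}")
```

Because each halving cuts the flow in half while the stock keeps growing slowly, the ratio roughly doubles at every halving, which is the scarcity effect the model builds on.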

Conclusion:

The LGS2F model provides a modified approach to Bitcoin price predictions by incorporating the concept of limited growth. Its projection of Bitcoin reaching around 40,000 USD by the end of 2024 reflects a more conservative estimate compared to previous models. However, it's important to remember that cryptocurrency markets are inherently volatile and subject to numerous unpredictable factors.

As with any predictive model, it's crucial to approach Bitcoin price predictions with caution and consider them alongside other fundamental and technical analysis tools. The LGS2F model offers a fresh perspective that acknowledges the evolving nature of Bitcoin and provides a more realistic framework for understanding its future price movements.

Read more about the Limited Growth Stock-to-Flow model: medium.com

The Ultimate Algorand (ALGO) Analysis - Bottom $0.1618

On 22 June 2019, Algorand opened at a price of around $3.28 on Coinbase, and slightly higher on Binance.

Over the next few months, it dropped to around $0.1648 (maybe $0.1618 on some exchanges) and then $0.097 at the Covid crisis.

Before the 2021 bull run, in November, ALGO's support level was around $0.2247 (Point X of the harmonic) before it began its ascent.

In early February of 2021, ALGO topped out around $1.8427 (Point A of the harmonic).

This is an increase of exactly $1.618, the golden ratio that the Fibonacci sequence converges to.

Coincidence? I don't think so.

After that, it dropped to Point B of the harmonic, around $0.67, which is a very strong Support/Resistance level.

Notice the number: 0.67 is roughly 2/3.

If I multiply 0.6667 by 0.6667 I get 0.4444.

0.6667 - 0.4444 = 0.222, almost EXACTLY Algorand's support level before the bull run.

OK, now this is getting crazy.

Algorand then rallied by 161.8% of the A-B leg to create Point C (around $2.5589).

It then dropped to around $1.5144 - the 0.444 support level (which I have marked "S"). (Remember that 0.4444 number from earlier? Yeah.....)

The price was then manipulated up to around $2.99-$3.

This manipulation point is a whole new conversation involving even more complex numbers, and I think it's best we avoid it in this argument, since it doesn't affect the current idea.

ANYWAY, if we ignore the manipulation, which we usually do in these circumstances, and use Point C as our harmonic level, we can see that BC is 1.618 times AB (161.8% of AB).

Now if we draw a fib between ZERO and A we get 0.618 which is at point B

OR

if we draw a fib between $0.223 (Start of 2021 bull run) and $1.84 ish (Point A), we get the retracement value around 0.707 which is half of the value of 1.414, and 1.414 is the square root of 2.

So AB is (XA x half of the square root of 2) and the next move entails a 1.618 move of that figure.

Crazy maths...

Anyway, in a standard AB=CD HARMONIC PATTERN, we have 3 different variations: CD = AB, CD = AB x 1.272 or CD = AB x 1.618.

The most common one is 1.272, which is the square root of 1.618.

Now what happens if we measure BC x 1.272?

The answer is a price of ALGO of $0.1618.

As soon as I saw that it hit me.

That's the bottom.

$0.1618, the Fibonacci golden number will likely be the bottom of Algorand in this cycle.
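The leg measurements used in this argument can be reproduced with a few subtractions. The Python sketch below uses the approximate prices quoted in the post for points X, A, B and C; the variable and function names are hypothetical, and the projection is measured downward from C as described above. Because the inputs are rounded chart readings, the result lands near $0.16 rather than on an exact figure.

```python
# Approximate chart points quoted in the post (USD)
X, A, B, C = 0.2247, 1.8427, 0.67, 2.5589

xa = A - X          # rally from X to A
ab = A - B          # drop from A to B
bc = C - B          # rally from B to C


def project_down(start: float, leg: float, ratio: float) -> float:
    """Price reached by falling ratio * leg from the start price."""
    return start - ratio * leg


d = project_down(C, bc, 1.272)  # BC x 1.272 measured down from C

print(f"XA = {xa:.4f}")
print(f"AB retracement of XA = {ab / xa:.3f}")
print(f"BC / AB = {bc / ab:.3f}")
print(f"projected D = ${d:.4f}")
```

Small changes in the chosen chart points move the projection by a cent or more, so the exact target depends on which exchange and which candle highs and lows are used.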

So what is the profit target?

So I checked a few measurements.

I tried CD x 1.618 (if we hypothetically say that $0.1618 is the bottom of Algorand this cycle) and that gave me a figure of around $4.03.

I also did (All Time High minus All Time Low) x 1.272 (the square root of 1.618)

and that gave me a similar figure of around $4.03.

OH ALSO, one last thing...

Algorand is currently in a Bear Flag, the target is around $0.223-0.226 to Buy the bounce. It will go lower around Christmas time, but if you look at the 1.414 level (square root of 2) of the Bear Flag, it also reaches the same point around $0.1618!